Generative UI vs Prompt to UI vs Prompt to Design

Fundamentally different outcomes of adjacent propositions.

As AI continues to reshape how software is created and used, three distinct but often conflated paradigms have emerged:

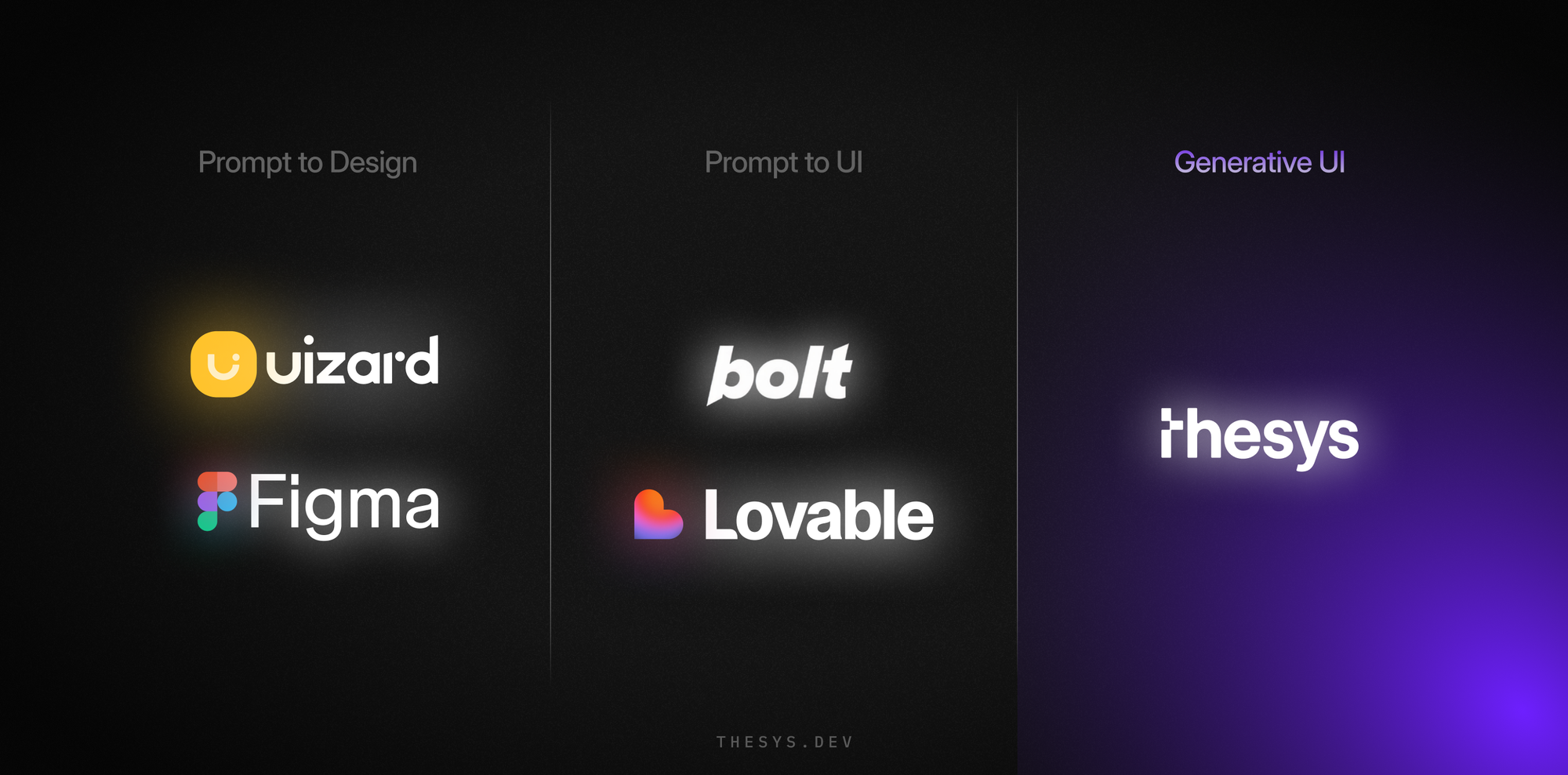

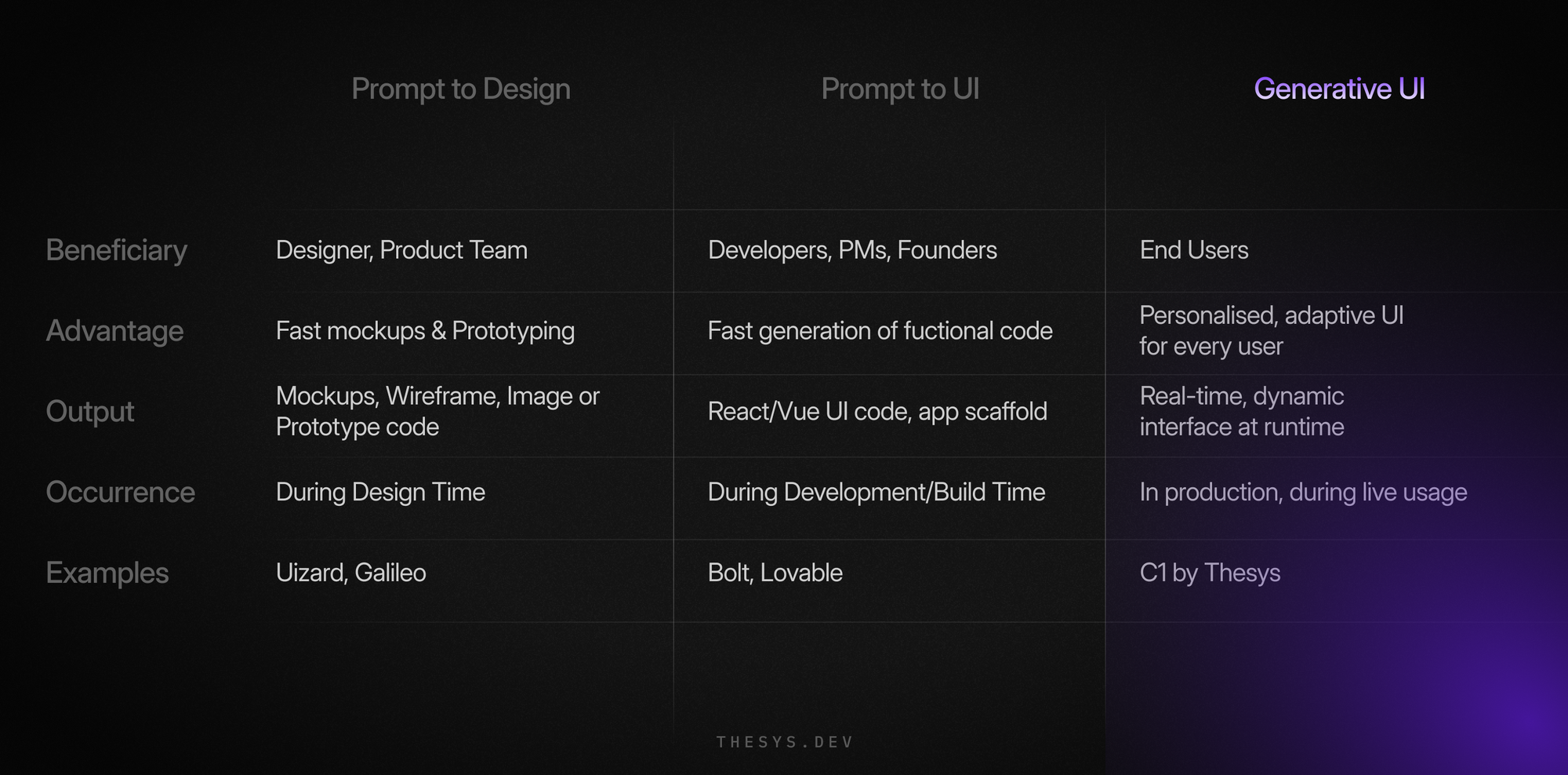

Prompt to Design | Prompt to UI | Generative UI

While they may sound similar, they serve different users, operate at different stages of the product lifecycle, and produce different types of output. In this post, we’ll explore the differences between these approaches across key dimensions.

Also Known As

Let’s clarify the alternate terms often used interchangeably:

| Canonical Term | Also Known As |
|---|---|
| Prompt to Design | Vibe Designing, AI-Assisted Design, GenAI Design, Text-to-Design, Design Generation |
| Prompt to UI | Prompt-to-Prototype, AI Prototyping, Text-to-UI, UI Generation |
| Generative UI | Runtime UI Generation, Personalized Interfaces, Intent-Based Interfaces, Autonomous Interfaces |

Aspect-Based Comparison

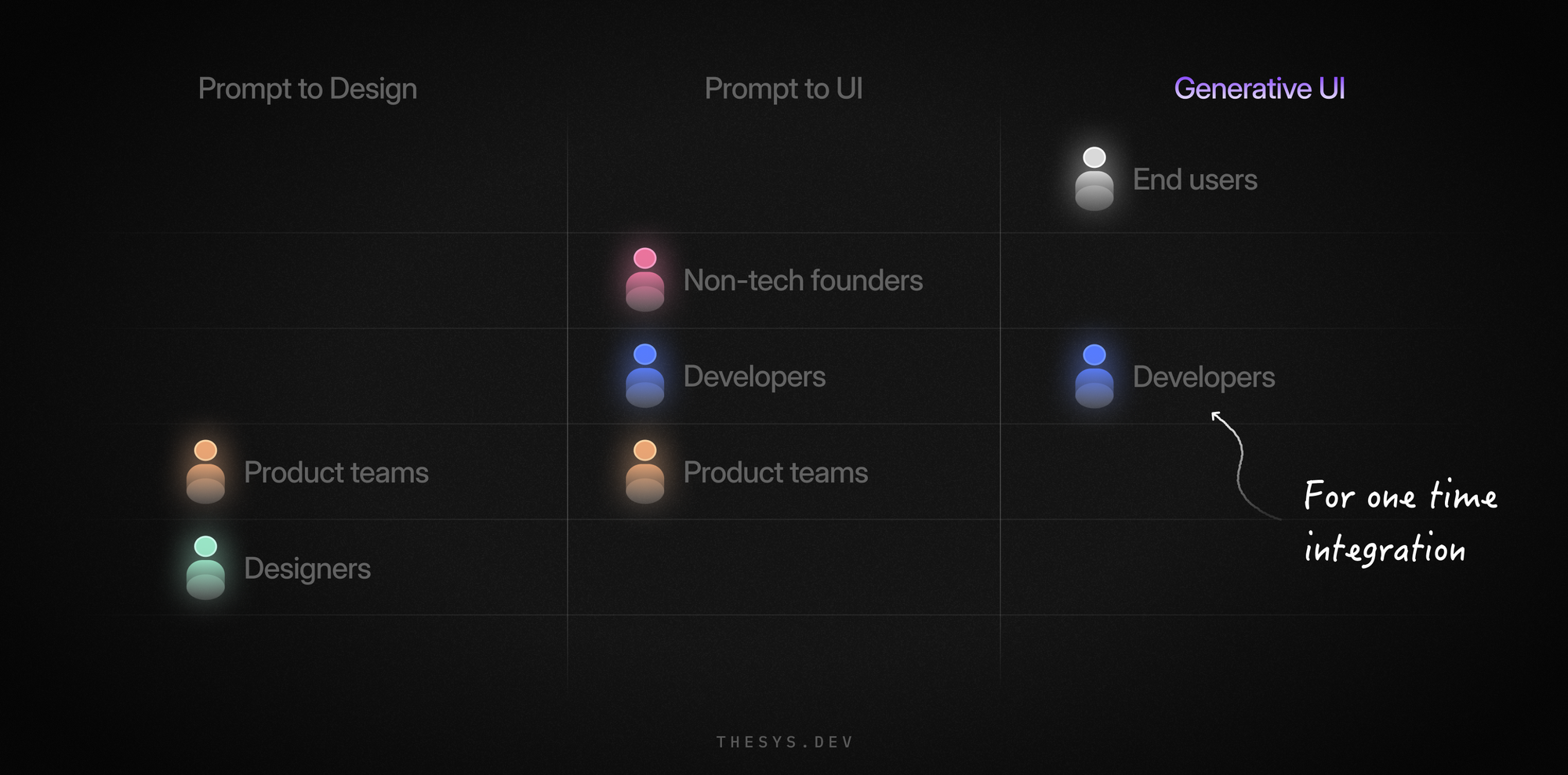

1. Who Benefits Most Here?

Prompt to Design is primarily used by designers and product teams to accelerate early design stages. These tools provide intelligent suggestions or automatically generate mockups.

Example: Uizard turns text or sketches into design mockups. Galileo AI and Figma AI take prompts and generate design-ready UI suggestions.

Prompt to UI targets developers, product managers, and non-technical founders. These tools generate working UI code, often including backend scaffolding, from natural-language prompts.

Example: Bolt and Lovable allow users to generate working React/Vue interfaces from a prompt.

Generative UI is a new kind of frontend infrastructure that flips the audience to the end user. Unlike traditional UI development, where developers handcraft static interfaces for every user type, Generative UI allows developers to integrate it once. From there, the system dynamically generates personalized interfaces in real time. It uses a combination of user context, behavioral signals, and intent to intelligently assemble layouts, components, and workflows on the fly. The result is a UI that adapts to each user’s needs and goals without requiring manual updates or rigid design flows.

Example: C1 by Thesys enables AI products to dynamically shape the UI based on each user’s intent and context.

2. What’s the Big Win?

Prompt to Design boosts speed during mockup creation and iteration, letting teams prototype quickly.

Example: Designers can type “dashboard with user stats and recent activity” and get multiple mockup suggestions instantly in Uizard or Galileo.

Prompt to UI dramatically accelerates frontend development. It turns ideas into executable UI code, reducing the need for manual frontend work.

Example: A product manager can say “a signup form with Google OAuth and dark mode” in Bolt, and it produces deployable React code within seconds.

Generative UI delivers interfaces that are not just created in real time but are continuously tailored to each individual user. By using live context such as behavior, preferences, and intent, the system assembles layouts and content that fit each moment. Two users can open the same app and see entirely different experiences, each optimized for their needs. As users interact, the UI adapts by removing friction, guiding actions, and adjusting itself to feel intuitive and personal. It transforms software from a static experience into a dynamic and responsive system designed around the user.

Example: In a learning app powered by C1 by Thesys, the UI changes based on how stuck or advanced the user is, auto-adapting navigation, content layout, and assistance widgets.

3. What’s the End Result?

Prompt to Design outputs static mockups or design assets, which may be editable in Figma-like environments. Some tools output basic HTML/CSS.

Example: Figma creates a canvas with pre-placed components (buttons, headers, cards) ready for human refinement.

Prompt to UI produces runnable UI code, typically in React, Vue, or similar frontend frameworks. It may include APIs, state handling, and basic auth.

Example: Lovable lets a user say “CRM dashboard with table view and filters,” and outputs a codebase complete with routing and state logic.

Generative UI does not produce something static or permanent. Its output is a dynamic interface that is rendered at runtime and built on the fly for each user session. The layout, components, and content are generated in real time based on context, making the experience unique to each user every time they interact with the product.

Example: With C1 by Thesys, a travel booking agent might rearrange content (chat, form, map) based on the user's urgency, context, or past behavior.
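To make the travel example more concrete, here is a minimal TypeScript sketch of how a runtime layer might decide the order of the three panels. The `SessionContext` fields, the scoring weights, and the `arrangePanels` function are all hypothetical and exist only for illustration. This is not how C1 by Thesys works internally, and a real system would likely derive these decisions from a model rather than hand-written rules.

```typescript
// Hypothetical sketch: rank UI panels for one session from simple context signals.
type Panel = "chat" | "form" | "map";

interface SessionContext {
  hoursUntilDeparture: number; // urgency signal (assumed field)
  hasDestination: boolean;     // whether the user already named a place
  pastBookings: number;        // rough proxy for familiarity with the product
}

// Score each panel; higher scores render first. Weights are illustrative only.
function arrangePanels(ctx: SessionContext): Panel[] {
  const scores: Record<Panel, number> = { chat: 1, form: 1, map: 1 };

  // Urgent trips: get the user to the booking form quickly.
  if (ctx.hoursUntilDeparture < 24) scores.form += 3;
  // No destination yet: a map helps exploration.
  if (!ctx.hasDestination) scores.map += 2;
  // First-time users get conversational guidance first.
  if (ctx.pastBookings === 0) scores.chat += 2;

  const panels: Panel[] = ["chat", "form", "map"];
  // Array.prototype.sort is stable, so ties keep the default order above.
  return panels.sort((a, b) => scores[b] - scores[a]);
}

const urgentReturning = arrangePanels({
  hoursUntilDeparture: 6,
  hasDestination: true,
  pastBookings: 4,
});
const browsingNewcomer = arrangePanels({
  hoursUntilDeparture: 720,
  hasDestination: false,
  pastBookings: 0,
});
console.log(urgentReturning, browsingNewcomer);
```

The point of the sketch is that the layout is a function of session context and is computed per request, so two users of the same app can receive different panel orders without any per-user design work.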

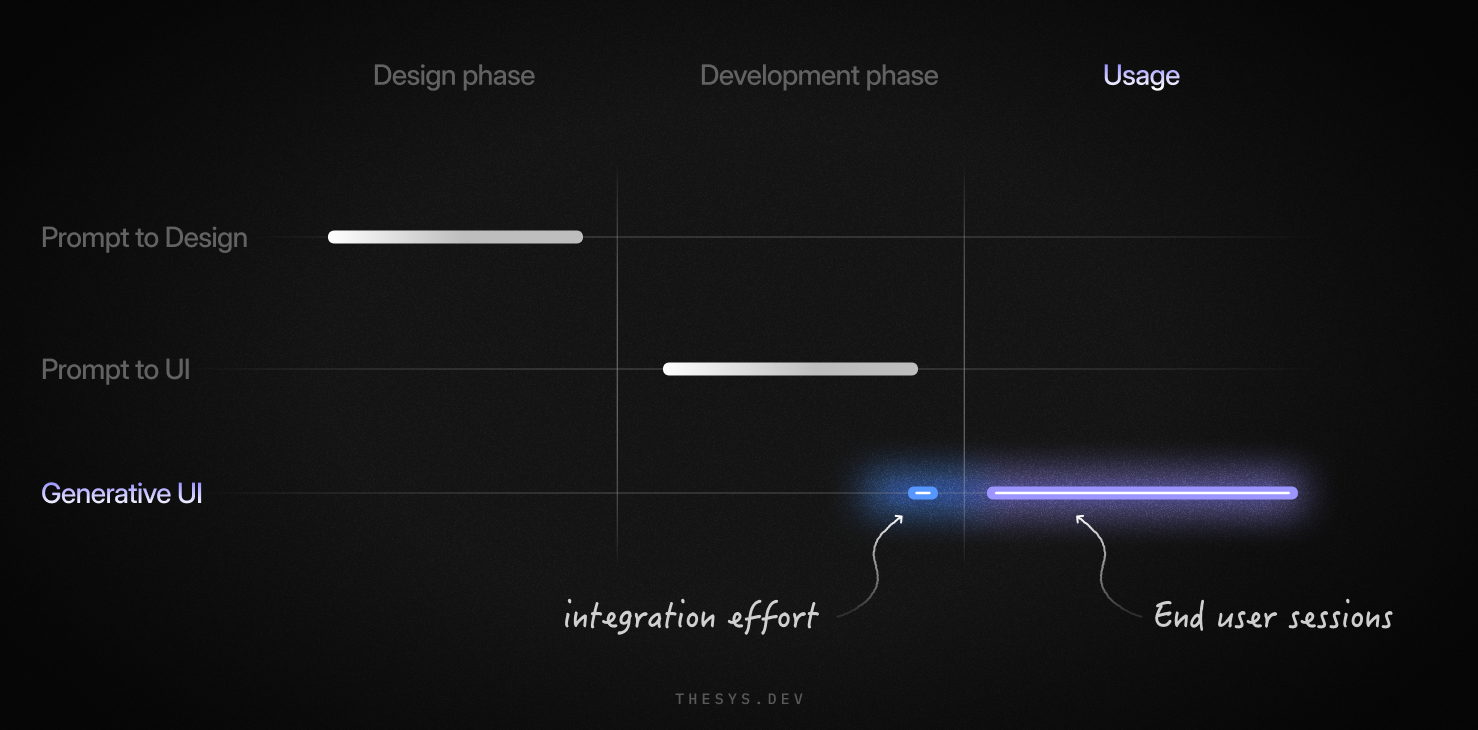

4. When Does the Magic Happen?

Prompt to Design runs during the design process. It helps teams during early ideation or prototyping phases. Its output needs additional development effort before it can be pushed to production.

Example: Using AI inside Figma to generate 3 layout options from a brief.

Prompt to UI runs at build time, helping developers scaffold codebases before deployment. The generated code still needs additional development effort to tie together data, backend, and business logic.

Example: Vercel’s v0.dev turns prompts into JSX + Tailwind code that developers can immediately export and customize.

Generative UI operates in real time during end-user interaction, responding instantly to changes in intent, context, or environment. It requires a one-time integration effort from developers that is simple, fast, and often as easy as adding two lines of code. Once integrated, the system takes over. Its API dynamically designs, develops, and delivers interfaces tailored to each user and session. The result is a personalized and adaptive UI experience that evolves with every interaction.

Example: A financial dashboard powered by C1 by Thesys may adapt its layout based on the user’s profile, for example by showing investment tips to beginners and risk analysis to experts.
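The difference between build-time and runtime generation can be illustrated with a small TypeScript sketch in which the layout is recomputed whenever the user's context changes. Everything here is an assumption made for illustration: the `AdaptiveDashboard` class, the `layoutFor` rule, and the widget names are hypothetical and do not represent the C1 by Thesys API.

```typescript
// Hypothetical sketch: recompute the layout whenever the user's context changes.
type Experience = "beginner" | "expert";
type LayoutListener = (layout: string[]) => void;

// Stand-in rule mirroring the dashboard example: tips for beginners,
// risk analysis for experts. A real engine would not be a fixed rule.
function layoutFor(experience: Experience): string[] {
  return experience === "beginner"
    ? ["balance", "investment-tips"]
    : ["balance", "risk-analysis"];
}

class AdaptiveDashboard {
  private listeners: LayoutListener[] = [];
  private experience: Experience;

  constructor(initial: Experience) {
    this.experience = initial;
  }

  // Register a renderer; it is called immediately and on every later change.
  subscribe(listener: LayoutListener): void {
    this.listeners.push(listener);
    listener(layoutFor(this.experience));
  }

  // Called when a new signal arrives about the user.
  setExperience(next: Experience): void {
    if (next === this.experience) return; // nothing changed, skip the re-render
    this.experience = next;
    for (const listener of this.listeners) listener(layoutFor(next));
  }
}

const rendered: string[][] = [];
const dashboard = new AdaptiveDashboard("beginner");
dashboard.subscribe((layout) => rendered.push(layout));
dashboard.setExperience("expert");
console.log(rendered);
```

In this sketch the renderer runs twice: once with the beginner layout at subscription time and once more when the profile changes. Nothing is rebuilt or redeployed in between, which is the property that separates runtime generation from the build-time output of Prompt to UI tools.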

5. What’s Under the Hood?

While these technologies and products can be developed through various approaches, and each company has its own unique implementation strategy, the following is one example of how it can be done.

Prompt to Design combines LLMs with generative image models (such as diffusion models or GANs) for layout generation. It’s usually embedded in design tools.

Prompt to UI leans heavily on LLMs (such as GPT-4 or Claude) to understand prompts and generate production-grade frontend/backend code.

Generative UI needs a customizable UI library, memory for storing user context, and a real-time GenUI engine that continuously reshapes the UI.
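As a rough illustration of how those three pieces could fit together, the TypeScript sketch below wires up a component library, a per-user memory store, and an engine function. All names (`componentLibrary`, `UserMemory`, `generateUI`, `isValidSpec`) are hypothetical, and the engine is a fixed rule standing in for whatever model a real product would call. It is one possible arrangement under those assumptions, not a description of any vendor's implementation.

```typescript
// Hypothetical sketch of the three pieces: component library, memory, engine.

// 1. Customizable UI library: the only components the engine may emit.
const componentLibrary = ["Chart", "Table", "Form", "TipCard"] as const;
type ComponentName = (typeof componentLibrary)[number];

interface UINode {
  component: ComponentName;
  props: Record<string, string>;
}

// 2. Memory: per-user context kept between interactions (in-memory here).
class UserMemory {
  private facts = new Map<string, Map<string, string>>();

  remember(userId: string, key: string, value: string): void {
    const userFacts = this.facts.get(userId) ?? new Map<string, string>();
    userFacts.set(key, value);
    this.facts.set(userId, userFacts);
  }

  recall(userId: string, key: string): string | undefined {
    return this.facts.get(userId)?.get(key);
  }
}

// 3. Engine: turns intent plus remembered context into a UI spec.
// A real engine would call a model; a fixed rule stands in for it here.
function generateUI(userId: string, intent: string, store: UserMemory): UINode[] {
  const view = store.recall(userId, "preferredView");
  const main: UINode =
    view === "table"
      ? { component: "Table", props: { title: intent } }
      : { component: "Chart", props: { title: intent } };
  return [main];
}

// Guard against output that references components outside the library.
function isValidSpec(nodes: { component: string }[]): boolean {
  return nodes.every((n) => componentLibrary.some((c) => c === n.component));
}

const userMemory = new UserMemory();
userMemory.remember("u1", "preferredView", "table");
const spec = generateUI("u1", "Monthly spend", userMemory);
console.log(spec, isValidSpec(spec));
```

Restricting the engine to a known component list is a design choice in this sketch: it keeps generated interfaces on-brand and lets the host app reject any spec that names a component it cannot render.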

Closing Thoughts

The future of UI creation is unfolding across three powerful paradigms:

Prompt to Design empowers creatives with faster iteration tools.

Prompt to UI bridges product ideas and live code instantly.

Generative UI revolutionizes how people experience software by letting the interface shape itself in real time, uniquely for them.

Each layer builds on the last, with the ultimate goal of delivering smarter, more intuitive, and more human software experiences. Here is a TL;DR:
