Why Generating Code for Generative UI Is a Bad Idea

In our previous blog post, we took a deep dive into how the C1 API for Generative UI works, explored what makes a complete GenUI system, and described how we at Thesys are trying to make GenUI accessible to all. Our efforts to realise this goal rest on three pillars: Crayon - our responsive, interactive and modular design system; C1 API - LLM middleware that enables GenUI with just an API call; and GenUI SDK - our offering to translate C1 API calls into Crayon-enabled generative interfaces.

But before we took this curated approach, we had a fundamental question to answer - what if we used the code-writing abilities of foundational LLMs to generate front-end code directly?

The Allure of Direct Code Generation

LLMs have become remarkably capable at code generation, especially for web development frameworks like React. Platforms like WebDev Arena showcase these impressive capabilities daily. This naturally raises the question: why not utilize this directly to generate interfaces on the fly?

The answer lies in understanding the fundamental limitations that make code generation unsuitable for production Generative UI systems.

Problem #1: Compilation Reliability

LLMs work as excellent assistants when it comes to generating code blocks and editing existing code [5]. While human-supervised code generation is gaining adoption and improving in quality over time, autonomously generating human-aligned code remains a challenge. A June 2025 study found that most generative models (other than large-scale models like GPT 4.1) struggle to produce React front-end code that compiles [1], with the most common errors being syntax errors and undefined symbols in the generated code. This uncertainty adds friction to adopting LLMs for reliable front-end code generation. Fallback mechanisms that debug compilation failures introduce high overheads that in many cases may not be feasible [2].
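To make the overhead of such a fallback concrete, here is a minimal sketch of a generate-compile-retry loop. The `Generator` and `Compiler` types and the fake implementations are hypothetical stand-ins, not part of any real toolchain; in a real system each call to `generate` would be a full LLM round trip and each call to `compile` a bundler run.

```typescript
// Hypothetical stand-ins: a real system would call an LLM and a bundler here.
type Generator = (prompt: string, previousError?: string) => string;
type Compiler = (source: string) => { ok: true } | { ok: false; error: string };

interface Attempt {
  source: string;
  error?: string;
}

// Regenerate until the code compiles or the attempt budget runs out.
function generateWithRetries(
  prompt: string,
  generate: Generator,
  compile: Compiler,
  maxAttempts: number,
): { source: string | null; attempts: Attempt[] } {
  const attempts: Attempt[] = [];
  let lastError: string | undefined;
  for (let i = 0; i < maxAttempts; i++) {
    // Feed the previous compiler error back so the model can try to fix it.
    const source = generate(prompt, lastError);
    const result = compile(source);
    if (result.ok) {
      attempts.push({ source });
      return { source, attempts };
    }
    lastError = result.error;
    attempts.push({ source, error: lastError });
  }
  return { source: null, attempts };
}

// Fake model: emits an undefined symbol first, then a fixed version.
const fakeGenerate: Generator = (_prompt, previousError) =>
  previousError ? "const Chart = () => null;" : "const Chart = () => missingSymbol;";

// Fake compiler: rejects any source that still references the undefined symbol.
const fakeCompile: Compiler = (source) =>
  source.includes("missingSymbol")
    ? { ok: false, error: "undefined symbol: missingSymbol" }
    : { ok: true };

const outcome = generateWithRetries("population chart", fakeGenerate, fakeCompile, 3);
console.log(`compiled after ${outcome.attempts.length} attempt(s)`);
```

Every failed attempt costs another full generation before the user sees anything, and the loop can still end with no usable output at all.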

Problem #2: Throughput and Token Inefficiency

The performance gap between code generation and direct UI generation is staggering. LLMs process intent and generate responses token-by-token, and writing code involves extensive syntax that consumes many tokens. Even with state-of-the-art models, increased complexity leads to longer, less efficient code compared to optimized alternatives.

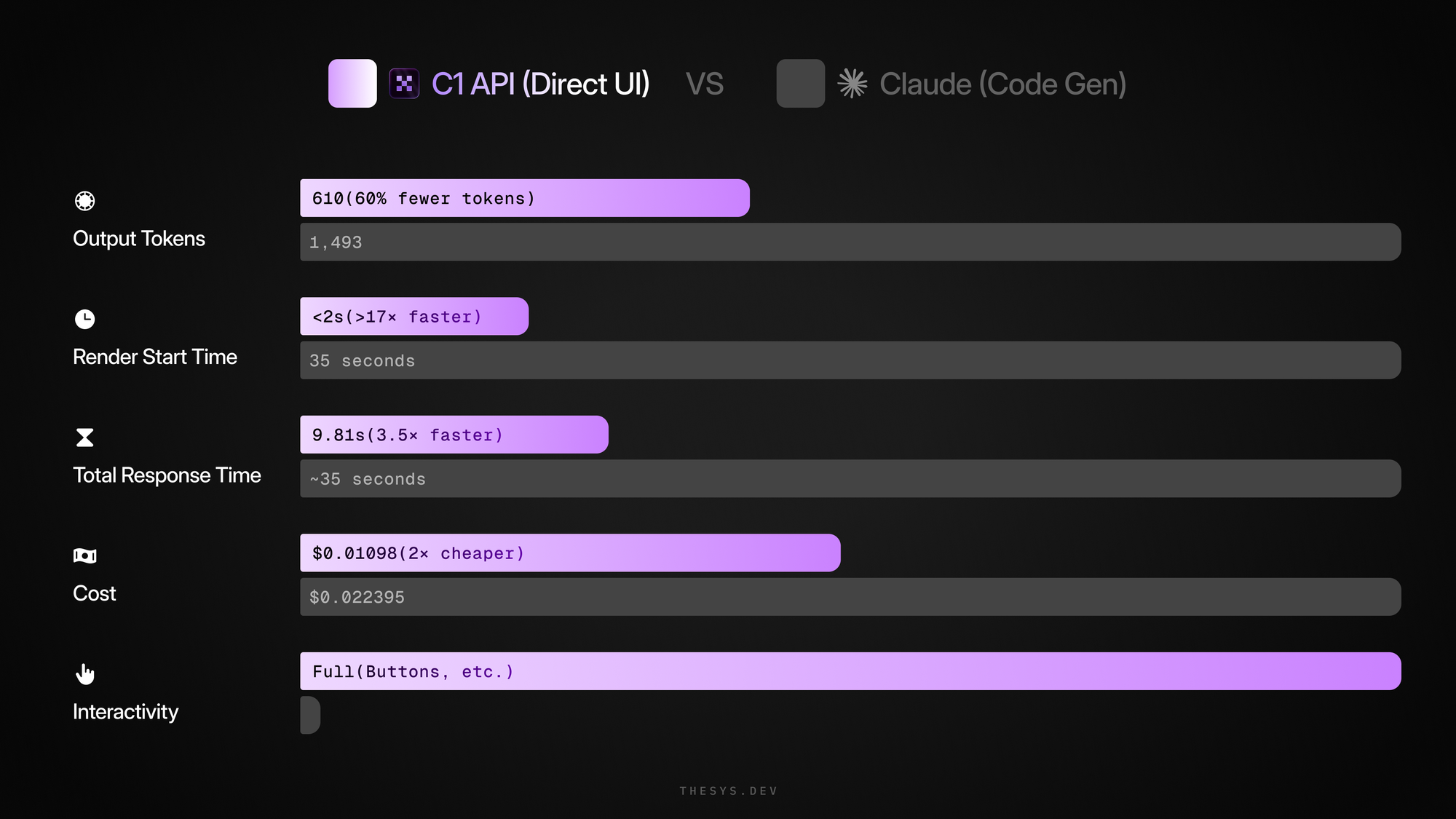

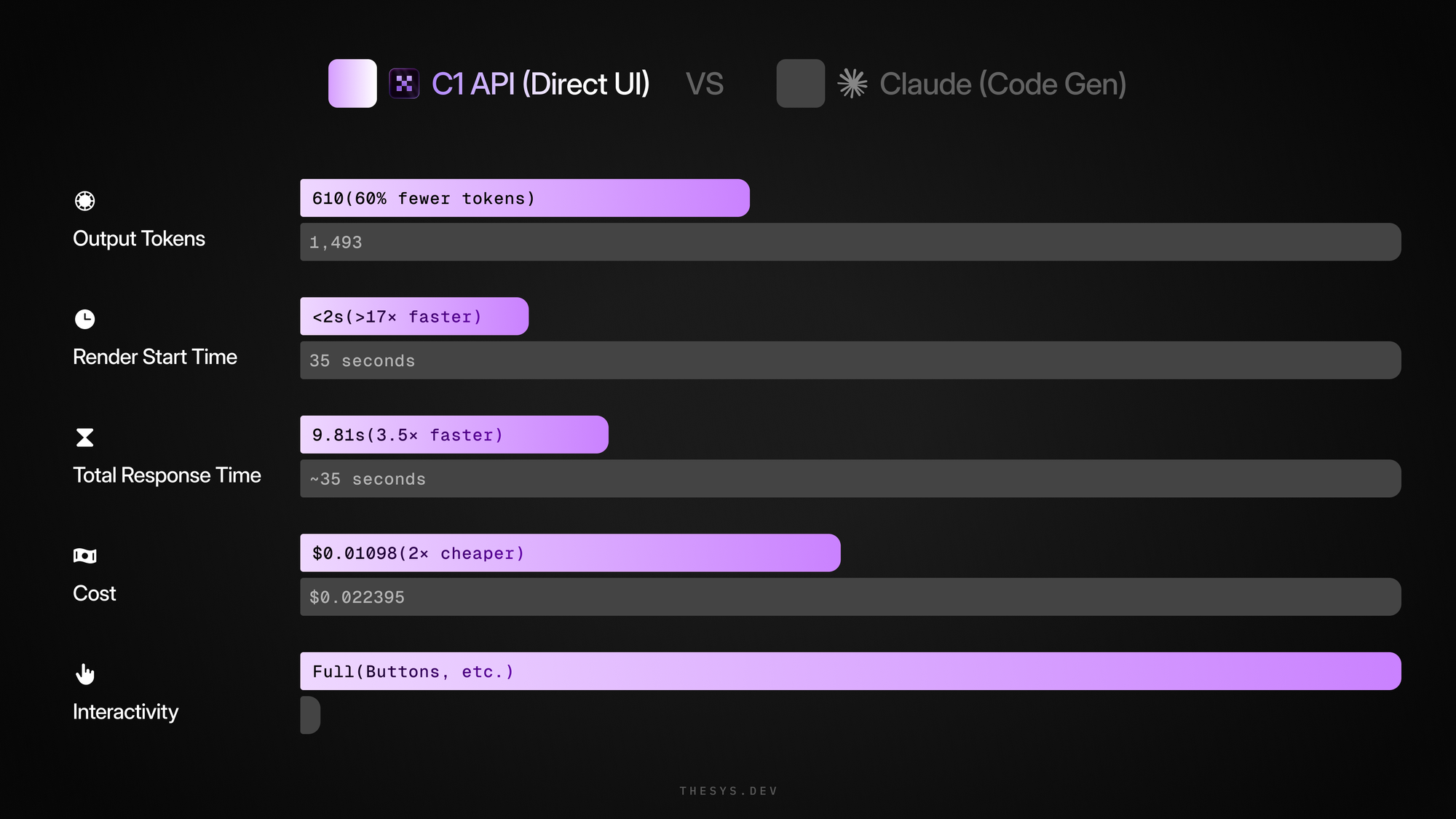

The Numbers Don't Lie

Consider this real-world comparison for the query "How has the US population changed since 1900?":

Claude Desktop (Code Generation):

- 1,493 output tokens consumed

- 35 seconds to render a static graph

- $0.022395 cost

- No interactivity - no buttons or follow-ups

C1 API (Direct UI Generation):

- 610 tokens consumed (about 59% fewer)

- Under 2 seconds to start rendering (roughly 17× sooner than the 35-second render)

- 9.81 seconds total response time

- $0.01098 cost with C1 Pricing (about 51% cheaper)

- Full interactivity with responsive components

This isn't just about speed—it's about token economics. Code generation wastes expensive LLM tokens on verbose programming syntax, while direct UI generation uses those same tokens efficiently to create structured components.
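For readers who want to check the arithmetic, the short sketch below recomputes the comparison from the figures quoted above. The `Run` shape and the helper names are ours, chosen only for illustration, and the 2-second value is the upper bound of "under 2 seconds", so the speedup is a lower bound.

```typescript
interface Run {
  tokens: number;
  costUsd: number;
  secondsToRender: number;
}

// Figures taken directly from the comparison above.
const codeGeneration: Run = { tokens: 1493, costUsd: 0.022395, secondsToRender: 35 };
// "Under 2 seconds" to start rendering, so 2 is used as a conservative bound.
const directUi: Run = { tokens: 610, costUsd: 0.01098, secondsToRender: 2 };

// Percentage by which `after` is smaller than `before`.
function percentReduction(before: number, after: number): number {
  return ((before - after) / before) * 100;
}

const tokenReduction = percentReduction(codeGeneration.tokens, directUi.tokens);
const costReduction = percentReduction(codeGeneration.costUsd, directUi.costUsd);
const renderSpeedup = codeGeneration.secondsToRender / directUi.secondsToRender;

console.log(`tokens: ${tokenReduction.toFixed(1)}% fewer`);   // 59.1% fewer
console.log(`cost: ${costReduction.toFixed(1)}% cheaper`);    // 51.0% cheaper
console.log(`first render: ${renderSpeedup.toFixed(1)}x sooner`); // 17.5x sooner
```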

Problem #3: Consistency and Design Systems

While large-scale models like GPT 4o and Claude Sonnet 4 can consistently generate code that compiles [1], they are highly inconsistent when it comes to delivering a predictable and efficient user experience. Imagine this - with each user prompt, the LLM might generate a completely different user interface in terms of the elements used, typography, layouts, themes, element styles, etc. The design system might also carry additional requirements that an LLM cannot effectively incorporate. Many use cases for generative UI call for generated components that follow a standard design language - adaptive, intent-driven, interactive, responsive and accessible. In addition, the generated output should be in line with the overall theme of the application or webpage.

This information can be conveyed to the LLM through system prompts, but that becomes a hassle as soon as the project starts to scale. There is only so much that can be translated from visual design constructs into a text-based prompt. Standardization and a consistent design system become very important when it comes to Generative UI, as highlighted in our previous blog (link here).

Problem #4: Modularity and Reusability

Effective generation calls for reusing already generated elements in order to optimise output latency and the overall user experience. This is very tricky to achieve with LLMs producing React code. Component-based design requires an additional layer alongside the LLM that maintains and reuses components that satisfy the user’s intent. With direct code generation, users have to supply the LLM with few-shot prompts, an approach that does not scale as the number of use cases grows. An alternative to prompting is to maintain your own LLM-compatible design library, which is a mammoth effort in itself.

LLM code generation is heavily influenced by the amount of code the LLM has seen in its training data. Using frameworks that are less popular, or newer and less widely used, might lead to inefficient code generation with frequent errors. This limits LLMs to highly popular and well-documented languages and frameworks [4].

The Path Forward: Infrastructure Over Code

Our exploration of LLM-generated code revealed it wasn't as scalable or consistent as hoped. This became the motivation behind our architecture—ensuring consistent user experience with high-quality Crayon components that are modular, reusable, interactive, responsive, and accessible.

The fundamental insight: The future of AI-native applications isn't about automating programming—it's about creating interfaces that adapt to user context as naturally as conversation itself. This requires infrastructure that generates structured UI components directly, not code that must be compiled and executed.
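As a rough illustration of the difference, the sketch below treats the model's output as data describing components rather than as code to compile. The `UiNode` vocabulary, the field names, and the `parseUiNode` helper are hypothetical and are not the actual C1 API response format or Crayon's component set; they only show the general idea of validating a structured description against a fixed set of known components.

```typescript
// Hypothetical component vocabulary; not Crayon's actual component set.
type UiNode =
  | { type: "text"; value: string }
  | { type: "button"; label: string; action: string }
  | { type: "stack"; children: UiNode[] };

// Validate untrusted model output against the known component vocabulary.
function parseUiNode(raw: unknown): UiNode {
  if (typeof raw !== "object" || raw === null) {
    throw new Error("node must be an object");
  }
  const node = raw as Record<string, unknown>;
  switch (node.type) {
    case "text":
      if (typeof node.value !== "string") throw new Error("text.value must be a string");
      return { type: "text", value: node.value };
    case "button":
      if (typeof node.label !== "string" || typeof node.action !== "string") {
        throw new Error("button needs string label and action");
      }
      return { type: "button", label: node.label, action: node.action };
    case "stack":
      if (!Array.isArray(node.children)) throw new Error("stack.children must be an array");
      return { type: "stack", children: node.children.map((child) => parseUiNode(child)) };
    default:
      // Anything outside the vocabulary is rejected instead of being executed.
      throw new Error(`unknown component type: ${String(node.type)}`);
  }
}

// Example of what a model might emit for the population query (illustrative only).
const modelOutput = JSON.stringify({
  type: "stack",
  children: [
    { type: "text", value: "US population since 1900" },
    { type: "button", label: "Show by decade", action: "drilldown" },
  ],
});

const tree = parseUiNode(JSON.parse(modelOutput));
console.log(tree.type);
```

Because the renderer only ever sees components it already knows, there is no compile step to fail, and styling stays in the pre-built components rather than in whatever the model happened to write.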

By incorporating these insights into the C1 API and GenUI SDK, we're building the foundation for truly dynamic, context-aware interfaces that respond to user needs in real-time. The goal isn't just better performance—it's unlocking entirely new categories of AI-native applications where the interface itself becomes intelligent and adaptive.

The bottom line: Generating code for Generative UI is solving the wrong problem. The numbers prove it, and the user experience demands better.

References

- [1] Xiao, Jingyu, et al. "DesignBench: A Comprehensive Benchmark for MLLM-based Front-end Code Generation." (2025). (https://arxiv.org/abs/2506.06251)

- [2] Ji, Junkai. "Experiments on Code Generation for Web Development using LLMs: A Comparative Study." (2024). (https://amslaurea.unibo.it/id/eprint/33788/)

- [3] Hosseini, Reza, and Pontus Ryden. "Generative AI in frontend web development: A comparison between AI as a tool and as a sole programmer: Evaluation of ChatGPT’s role within frontend development." (2024). (https://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-350910)

- [4] Alikhani, Mohammad Hossein. "Generative AI for Front-End Development-Automating Design and Code with GPT-4 and Beyond." Available at SSRN 5117742 (2025). (http://dx.doi.org/10.2139/ssrn.5117742)

- [5] Acher, Mathieu. "A Demonstration of End-User Code Customization Using Generative AI." Proceedings of the 18th International Working Conference on Variability Modelling of Software-Intensive Systems (2024). (https://doi.org/10.1145/3634713.3634732)